So your team’s SEO efforts have paid off: your site ranks well. The catch is that your staging environment is popping up in search results too. You don’t want staging to be publicly discoverable, or to displace your production pages when someone runs a simple search.

Here are some ways to make sure staging only reaches its intended audience: your team.

Deterring crawlers with robots.txt

Your site’s robots.txt file defines rules for crawlers, telling them which paths they may crawl and, for crawlers that honor it, how long to wait between requests. Your team has probably customized and optimized your main site’s robots file, but staging’s is easy to overlook.

Blocking all crawling in staging’s robots.txt is perhaps the most important step you can take to hide it from search engines. You’ll want to write a separate robots file for staging and serve it only on your staging domain (you might do this conditionally by checking an environment variable that tells staging apart from production, or let a configuration management tool like Ansible handle it). But first, make sure a robots.txt is being served at all: you should be able to open it at {{ your domain }}/robots.txt.

All you need in your staging environment’s robots.txt file is:

User-agent: *
Disallow: /
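If your app serves robots.txt itself, the environment check can be as small as this Python sketch. It assumes a hypothetical APP_ENV variable and placeholder production rules, and it uses the standard library’s urllib.robotparser to confirm that the staging file really blocks a crawler.

```python
import os
import urllib.robotparser
from typing import Optional

# Served on staging (and on anything not explicitly production): block all crawlers.
STAGING_ROBOTS = "User-agent: *\nDisallow: /\n"

# Placeholder for your real production rules; an empty Disallow allows everything.
PRODUCTION_ROBOTS = "User-agent: *\nDisallow:\n"


def robots_txt(environment: Optional[str] = None) -> str:
    """Return the robots.txt body for the given environment.

    APP_ENV is a hypothetical variable name; substitute whatever your deployment sets.
    """
    env = environment if environment is not None else os.environ.get("APP_ENV", "")
    # Only an explicit "production" gets the permissive file, so a missing or
    # misspelled variable fails closed (crawlers blocked) rather than open.
    return PRODUCTION_ROBOTS if env.strip().lower() == "production" else STAGING_ROBOTS


def allows_crawling(robots_body: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Check a robots.txt body the way Python's standard-library parser reads it."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_body.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    print(allows_crawling(robots_txt("staging"), "https://staging.example.com/blog/"))  # False
    print(allows_crawling(robots_txt("production"), "https://www.example.com/blog/"))  # True
```

The fail-closed default is a judgment call: it protects staging if the variable goes missing, but production must then set APP_ENV=production explicitly. The same parser also shows why the User-agent and Disallow lines belong together: Python’s robotparser, following the original robots.txt convention, treats a blank line as the end of a record and silently drops a Disallow that comes after one.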

Unfortunately, some crawlers ignore robots files: robots.txt is a request, not an enforced rule. To catch these less common cases, some site admins set up hidden pages that well-behaved crawlers are told not to visit, then IP-block any client that requests them.
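A minimal Python sketch of that trap, written as WSGI middleware. The trap path is hypothetical, and blocked addresses live in an in-memory set, so this only illustrates the idea; a real deployment would more likely feed blocks to a firewall or a shared store. Behind a reverse proxy, REMOTE_ADDR is the proxy’s address rather than the crawler’s, so you would need the forwarded client address instead.

```python
from typing import Callable, Iterable, Optional, Set

# Hypothetical trap path: disallow it in robots.txt and never link it visibly,
# so only crawlers that ignore robots.txt should ever request it.
TRAP_PATH = "/do-not-crawl/"

WSGIApp = Callable[[dict, Callable], Iterable[bytes]]


def honeypot(app: WSGIApp, trap_path: str = TRAP_PATH, blocked: Optional[Set[str]] = None) -> WSGIApp:
    """Wrap a WSGI app so any client that requests trap_path is refused from then on."""
    blocked_ips = set() if blocked is None else blocked

    def middleware(environ, start_response):
        # Behind a reverse proxy this is the proxy's address, not the crawler's.
        ip = environ.get("REMOTE_ADDR", "")
        if environ.get("PATH_INFO", "").startswith(trap_path):
            blocked_ips.add(ip)
        if ip in blocked_ips:
            start_response("403 Forbidden", [("Content-Type", "text/plain; charset=utf-8")])
            return [b"Forbidden\n"]
        return app(environ, start_response)

    return middleware
```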

Removing your sitemap

Your main sitemap (likely sitemap.xml in your site’s root directory) may update automatically to list all live pages. If you’re carrying the same configuration over to your staging environment, staging might have a public sitemap as well. A sitemap invites search engines to crawl and index every page it lists, which is exactly what you don’t want for staging.

The fix is easy: you don’t need a sitemap for staging, so you can just delete it. Depending on how it’s configured, your staging site might instead serve a copy of your production sitemap that lists only production URLs, in which case you can keep it.
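If you keep staging’s copy of the sitemap, it’s worth confirming that it really lists only production URLs. A minimal Python sketch using the standard library’s XML parser, assuming the standard sitemaps.org namespace and a hypothetical production host of www.example.com:

```python
import xml.etree.ElementTree as ET
from typing import List
from urllib.parse import urlsplit

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def non_production_urls(sitemap_xml: str, production_host: str) -> List[str]:
    """Return every <loc> URL in a sitemap whose host is not the production host."""
    root = ET.fromstring(sitemap_xml)
    locs = [loc.text.strip() for loc in root.iter(SITEMAP_NS + "loc") if loc.text]
    # urlsplit().hostname is lowercased, so compare against a lowercased host.
    return [url for url in locs if urlsplit(url).hostname != production_host.lower()]
```

An empty list means the sitemap points only at production and can stay; anything else is a sign to delete it or stop generating it on staging. Sitemap index files list their child sitemaps in loc elements too, so the same check applies to them.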

Pointing canonical URLs to your production site

Canonical link tags tell search engines which URL is the original, preferred version of a page’s content. For staging pages, that should always be your main site; otherwise, search engines see two competing versions and have a harder time deciding which page to prioritize. If you autogenerate your canonical tags, the ones on staging pages might point to the staging environment instead.

If your staging environment is publicly accessible, this is crucial to fix: the last thing you need is for staging’s copy of a blog post to outrank the original.

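The remedy? Always set the canonical URL to your production domain followed by the page’s path. In a Hugo template, where baseUrl is a site parameter holding your production domain, that looks like: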

<link rel="canonical" href="{{ .Site.Params.baseUrl }}{{ .RelPermalink }}" />
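Outside Hugo, the same rule can live in application code: rebuild the canonical from the production host plus the requested path, ignoring whichever host actually served the page. A minimal Python sketch, assuming a hypothetical production base of https://www.example.com served from the site root, and assuming query strings and fragments should not be part of the canonical URL:

```python
import html
from urllib.parse import urlsplit, urlunsplit

PRODUCTION_BASE = "https://www.example.com"  # hypothetical production domain


def canonical_url(request_url: str, production_base: str = PRODUCTION_BASE) -> str:
    """Swap the request's scheme and host for production's, keeping only the path."""
    prod = urlsplit(production_base)
    path = urlsplit(request_url).path or "/"
    # Query string and fragment are dropped on purpose.
    return urlunsplit((prod.scheme, prod.netloc, path, "", ""))


def canonical_tag(request_url: str, production_base: str = PRODUCTION_BASE) -> str:
    """Render the <link rel="canonical"> tag with an HTML-escaped href."""
    href = html.escape(canonical_url(request_url, production_base), quote=True)
    return f'<link rel="canonical" href="{href}" />'
```

Because the production base is a constant rather than the incoming Host header, staging and production render identical tags.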

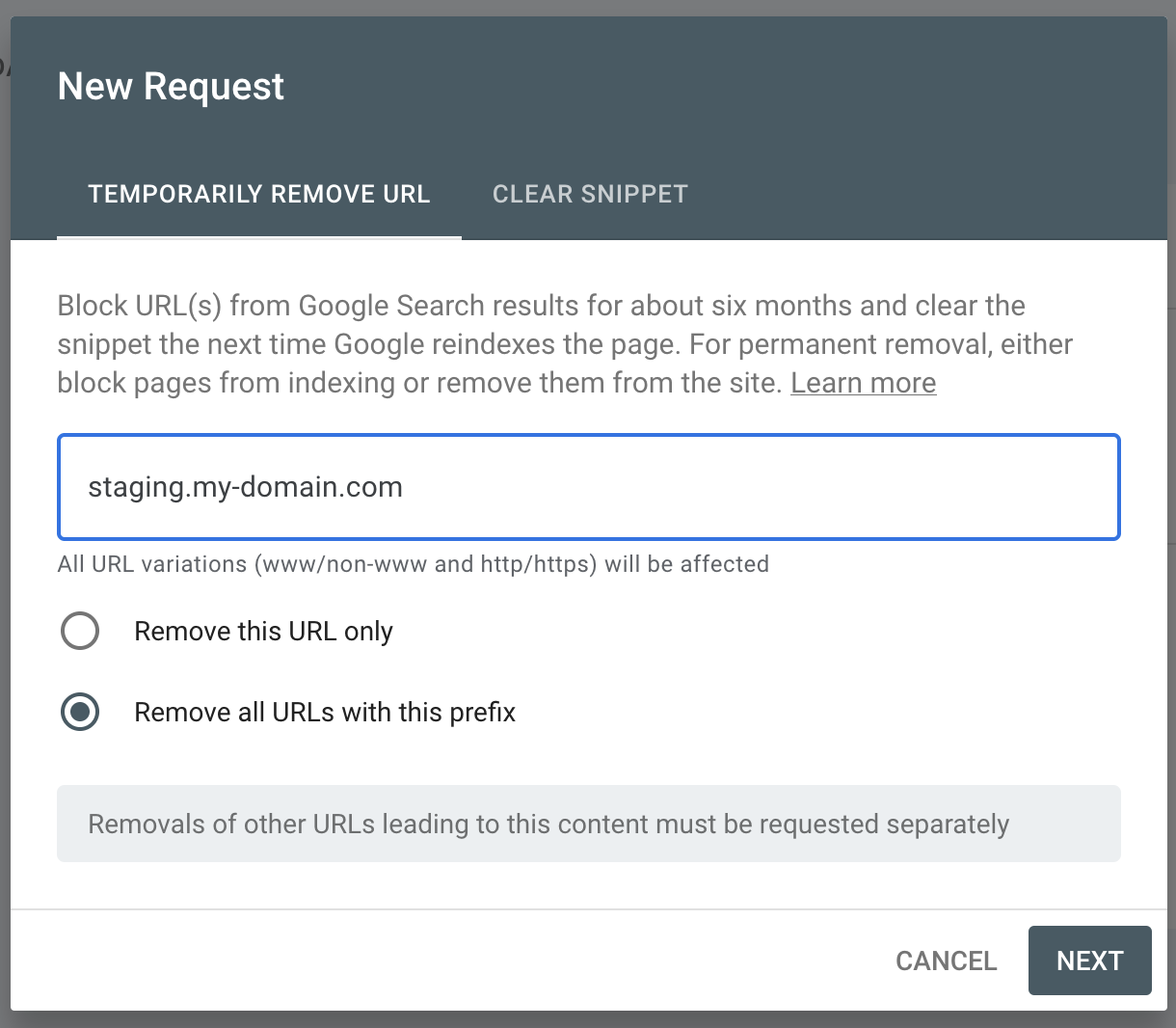

Removing URLs with Google Search Console

Did your staging environment get indexed anyway? If you’re a verified owner of the staging site in Google Search Console, you can use the Removals tool to request that individual URLs (or everything under a URL prefix) be hidden from Google’s search results for about six months. This isn’t a permanent solution, but it buys you time while you make your staging site search-engine-unfriendly.

Bing Webmaster Tools has a similar feature, the Block URLs tool. Its blocks are even more temporary, though: they expire after 90 days.

Using HTTP authentication

One of the most secure ways to keep staging hidden from both crawlers and curious visitors is to set up HTTP authentication. That way, no user (or bot) can load any page without providing a username and password. Earlier, we mentioned that some crawlers ignore robots.txt; this measure keeps them out reliably, because without credentials they get a 401 Unauthorized response instead of your content.

If you serve staging with nginx, you can set this up quickly: create a password file with the htpasswd utility from the apache2-utils package, then add auth_basic to the location block for your site’s root so that every staging visitor has to sign in:

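location / {
    try_files $uri $uri/ =404;
    # Require credentials on every request; the string is the realm name sent to the browser
    auth_basic "Authorization required";
    # Password file created with htpasswd (apache2-utils)
    auth_basic_user_file /etc/nginx/.htpasswd;
}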

The biggest downside is the extra friction for your developers and automated tests, since they’ll always need the credentials on hand.
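For scripts and automated tests, one way to keep that friction low is to read the credentials from the environment and attach them to every request. A minimal Python sketch using only the standard library; STAGING_USER and STAGING_PASSWORD are hypothetical variable names:

```python
import base64
import os
import urllib.request
from typing import Optional


def basic_auth_header(username: str, password: str) -> str:
    """Build an HTTP Basic Authorization header value."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def staging_request(url: str, username: Optional[str] = None,
                    password: Optional[str] = None) -> urllib.request.Request:
    """Create a request for a staging URL with credentials attached up front.

    Falls back to the hypothetical STAGING_USER / STAGING_PASSWORD variables.
    """
    user = username if username is not None else os.environ["STAGING_USER"]
    secret = password if password is not None else os.environ["STAGING_PASSWORD"]
    request = urllib.request.Request(url)
    # Send the header preemptively instead of waiting for a 401 challenge.
    request.add_header("Authorization", basic_auth_header(user, secret))
    return request
```

A test would then call urllib.request.urlopen(staging_request(url)) and receive the page rather than a 401, with the credentials kept in your CI system’s secret store instead of the repository.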

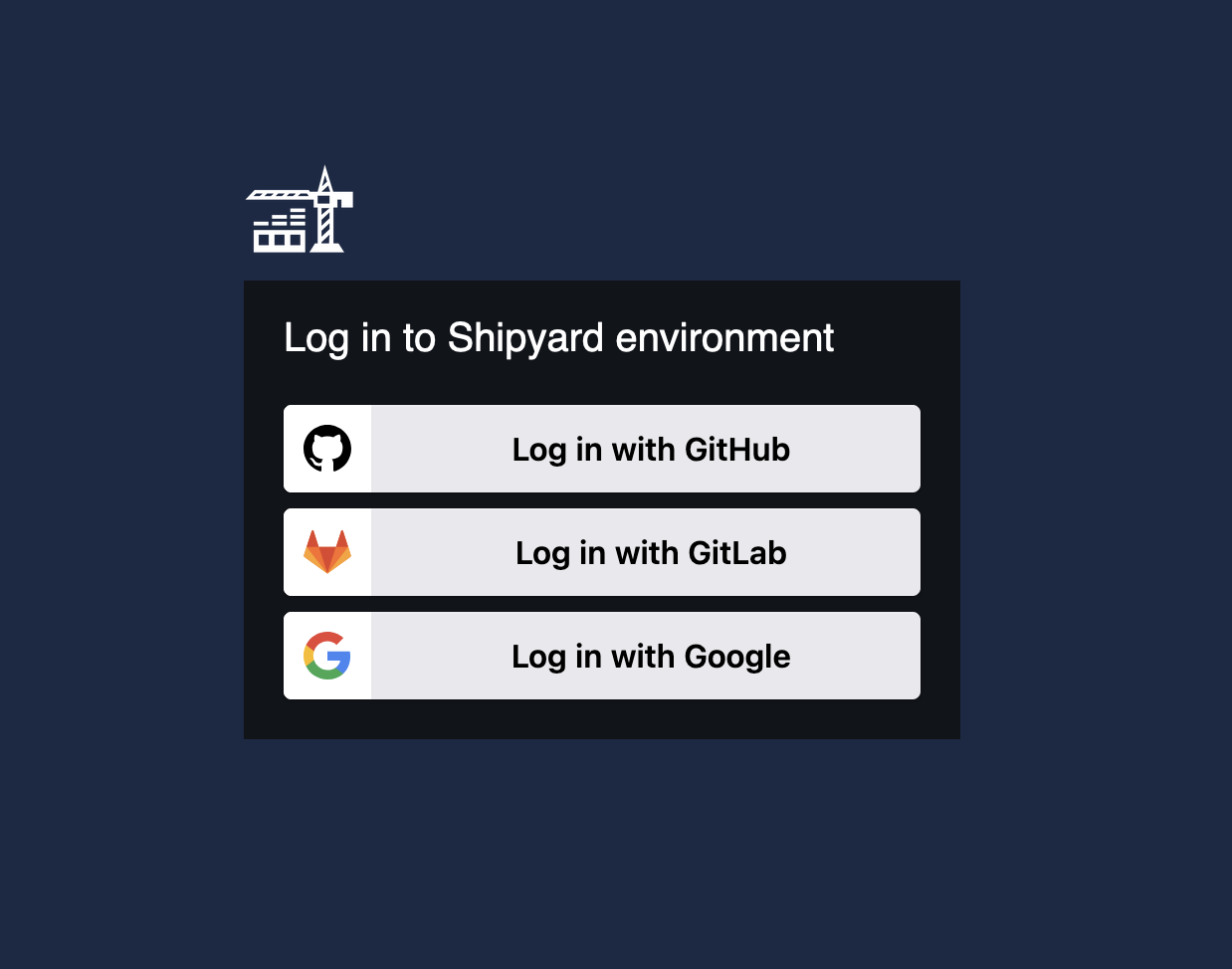

You can also set up authentication at the application level, requiring users to log in before they can reach the staging site. Or, if you use Shipyard, every environment sits behind an SSO gateway by default, and users get a visitor management portal.
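What application-level protection looks like depends on your framework. As a framework-neutral illustration, here is a minimal Python WSGI middleware that enforces HTTP Basic authentication inside the app itself. The username and password are hypothetical, and a real setup would load hashed credentials from a secret store rather than keeping plaintext passwords in code.

```python
import base64
import binascii
import hmac
from typing import Callable, Dict, Iterable

WSGIApp = Callable[[dict, Callable], Iterable[bytes]]


def _credentials_ok(header: str, users: Dict[str, str]) -> bool:
    """Validate an 'Authorization: Basic ...' value against a username -> password map."""
    scheme, _, encoded = header.partition(" ")
    if scheme.lower() != "basic" or not encoded:
        return False
    try:
        decoded = base64.b64decode(encoded.strip(), validate=True).decode("utf-8")
    except (binascii.Error, UnicodeDecodeError):
        return False  # malformed base64 or non-UTF-8 credentials
    username, sep, password = decoded.partition(":")
    expected = users.get(username)
    if not sep or expected is None:
        return False
    # Constant-time comparison so timing doesn't reveal how much of the password matched.
    return hmac.compare_digest(expected.encode("utf-8"), password.encode("utf-8"))


def require_basic_auth(app: WSGIApp, users: Dict[str, str], realm: str = "Staging") -> WSGIApp:
    """Wrap a WSGI app so every request must carry valid Basic credentials."""
    def middleware(environ, start_response):
        if _credentials_ok(environ.get("HTTP_AUTHORIZATION", ""), users):
            return app(environ, start_response)
        start_response("401 Unauthorized", [
            ("Content-Type", "text/plain; charset=utf-8"),
            ("WWW-Authenticate", f'Basic realm="{realm}"'),
        ])
        return [b"Authorization required\n"]
    return middleware
```

You would wrap the application object once, for example app = require_basic_auth(app, users), and only in the staging deployment.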

Staging goes incognito

You’ve probably seen this problem in the wild: when was the last time you tried to buy, look up, or find something and ended up on someone’s QA domain? Anyone who has maintained a pre-production environment knows this is not a good look. Users risk seeing an incomplete version of your site, possibly with changes still awaiting review. And you definitely don’t want your staging site competing with your main site in search results.

If you take some combination of the measures above, you can help your staging environment fly under the search engine indexing radar. Some of these steps may take a while to take effect, since search engines don’t recrawl sites very often. To check whether they’re working, look at your Google Search Console data and see how many clicks from organic search still land on your staging, QA, or test environments.