End-to-end (E2E) tests are extremely useful for automating common user workflows, and they take a solid chunk of work off manual QA. Claude Code, when given the right direction, can help write tests that interact with your features and make sure there aren’t any obvious bugs in your app. As a bonus, Claude can analyze test results and use them to fix or improve your features. This is another valuable addition to the agentic development loop: it helps keep Claude on course without you manually checking your live application.

Gearing up for E2E testing

Now that I’ve used Claude Code to build a full-stack app, I want to generate a series of E2E tests that can run against every new commit in the CI/CD pipeline. Using Claude Code for feature development is convenient, yet unpredictable (it takes specific, thorough prompting to get high-quality, working features). This means we want a quick way to check whether new code changes exhibit the behavior we want. Claude Code itself can’t verify feature “correctness” (it can’t visit or interact with the app, aside from making HTTP fetch requests), but that doesn’t stop it from guessing.

E2E tests are commonly written with frameworks like Playwright or Cypress. I’m going to ask Claude to write this batch in Cypress, since I’m more familiar with its syntax and config, which will help when I need to debug Claude’s output.

While we write the tests, we’ll want a copy of the app running locally so we can run them frequently and analyze their logs. However, once they’re written and working, we’ll want to run our app in a remote test environment so we can offload hosting/testing from our local machine.

Trial and error in agentic test-writing

The first time I attempted to write E2E tests with Claude Code, I described a feature’s intended behavior and asked Claude to perform a fetch request, read the code itself, and write a test to fit it. This approach needed some fine-tuning. It took really dense, context-heavy prompts to get tests that would even fail against working features. Of course, LLM-driven development needs plenty of context to produce quality output. However, prompting for the tests ended up being disproportionately harder than prompting for the initial feature.

I decided to try a different approach, one that tends to work well for human developers: test-driven development. It was much more successful, and Claude handled the feature-test pairing beautifully.

Using Claude Code for Test-Driven Development (TDD)

I wanted to experiment with a test-driven development (TDD) workflow. TDD is a process where you write the tests for a feature before writing the feature itself. The tests are expected to fail at first, and the feature stays in development until they pass.

I tried this out by first describing to Claude the workflow I expected to use:

Let's use test-driven development for this next feature. This means we are going to write tests before feature development, and design the tests to outline expected behavior for new features. The tests are initially expected to fail, but once we build out the MVP feature, they will pass. Later, we'll refactor our feature's code.

In this instance, let's focus on writing the tests only. We'll start with user stories and write tests to match them, based on what you know about the recipe app. To grab context, you can make fetch requests to the app and its endpoints.

Claude interpreted this pretty well, but it started writing tests right away. I followed up with a prompt letting it know that I intended to specify my own user stories and features:

Let's keep this prompt in mind for context on how our TDD workflow will play out, and not generate anything yet!

Adding a new feature with TDD

In the last post, I used Claude Code to help me write a recipe database app. It’s a simple CRUD app with a React, Django, and Postgres stack, using Material Design 3 for the styling. Users can create and store their favorite recipes, sorted by category.

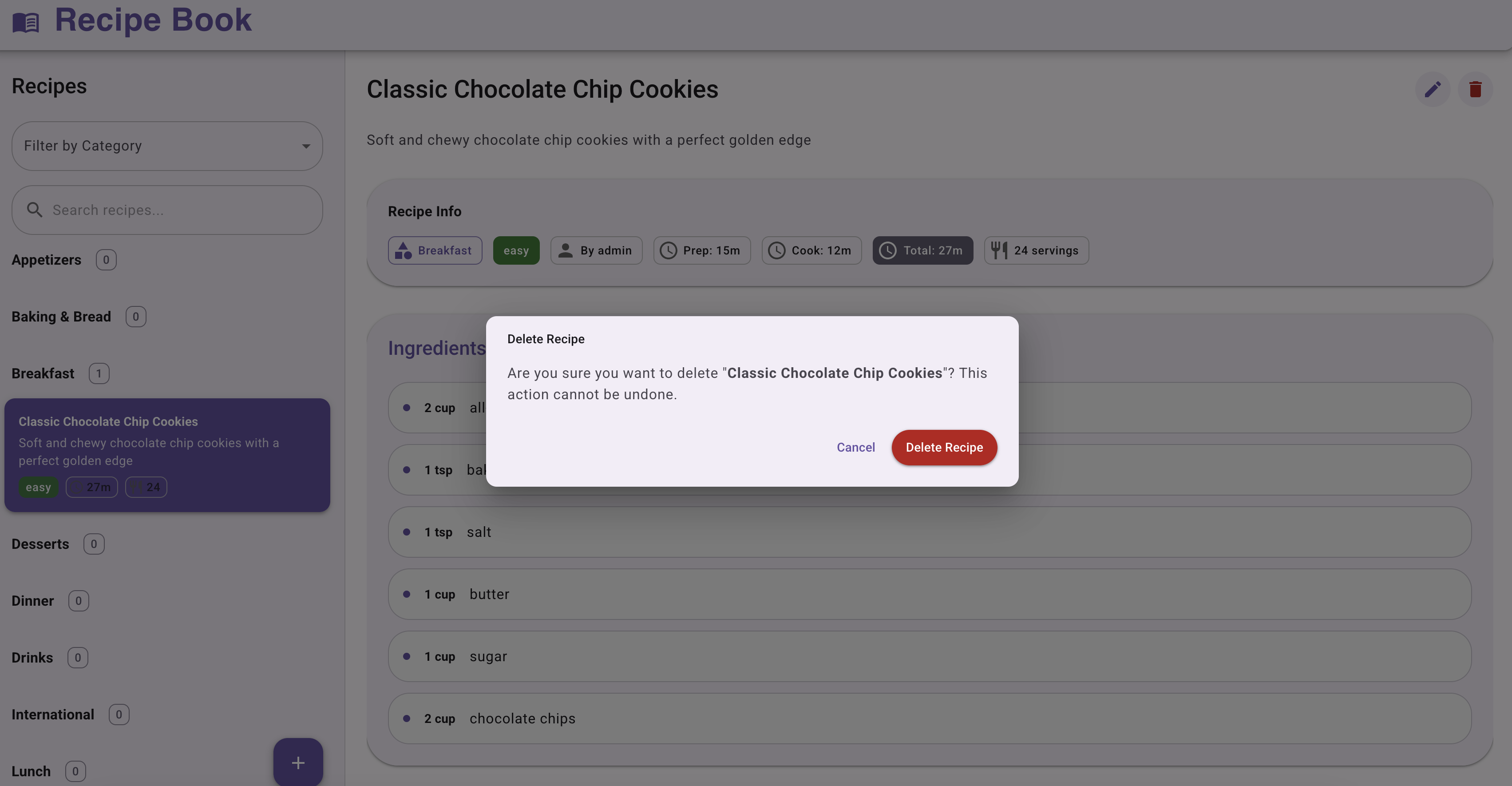

The app was missing a feature whose absence made using it (and testing it) a little frustrating: deleting recipes. As a user, I’d like to remove recipes that I no longer want in my library, so I used TDD principles to build this feature.

Writing tests for the feature

I started off by restating the user story for the delete function, then added some more concrete guidelines so that Claude knew exactly what the tests should cover:

As a user, I want to delete recipes that I no longer need in my recipe library.

For this recipe app, I want to write an end-to-end test that tests a potential "delete" feature. I want the test to interact with not-yet-existent UI features to simulate a user workflow, and then confirm afterward that the element in question is gone.

The test should select an existing recipe to open the detailed view, click the trash can icon / delete button, and confirm the exact recipe no longer exists in the sidebar.

Remember: do not write the feature yet, only the Cypress test!

Claude broke this down into four separate tests:

- Deleting a recipe

- Canceling a delete request

- Handling deletion errors

- Ignoring delete requests for recipes the user doesn’t own

Following my directions on how to use TDD, Claude ran the tests and confirmed that all four failed.

Writing the feature

Now we can write the feature to match our end-to-end tests. Since the tests outline most of the logic, Claude has solid context to work from. Just like in human-driven TDD, writing the feature ends up being the simpler part. I wanted to see how well Claude could work from the tests alone, so I gave it a more condensed prompt here:

Let's take the new E2E tests and use them to build a delete feature, using the TDD process.

We can start with the UI button on the recipe view, make sure it deletes from the database, and remove the recipe from sidebar. We can assess correctness by seeing whether our tests pass.
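The four scenarios Claude wrote tests for map onto four branches the delete feature has to handle. Below is a minimal TypeScript sketch of that client-side flow. It is not the code Claude generated; it assumes a hypothetical `Recipe` shape with an `ownerId`, plus two injected dependencies (`confirmDelete` for the confirmation dialog and `sendDelete` standing in for the real request to the Django API), so the branching can be exercised without a browser.

```typescript
// Hypothetical recipe shape; the real app's model may differ.
interface Recipe {
  id: number;
  title: string;
  ownerId: number;
}

type DeleteOutcome =
  | { status: "deleted"; recipes: Recipe[] }
  | { status: "cancelled"; recipes: Recipe[] }
  | { status: "ignored"; recipes: Recipe[] }
  | { status: "error"; recipes: Recipe[]; message: string };

// Injected so the flow can be tested without a UI or a running API (assumption).
interface DeleteDeps {
  confirmDelete: (recipe: Recipe) => Promise<boolean>; // e.g. a confirmation dialog
  sendDelete: (id: number) => Promise<void>; // e.g. a DELETE request; rejects on failure
}

async function deleteRecipe(
  recipes: Recipe[],
  id: number,
  currentUserId: number,
  deps: DeleteDeps,
): Promise<DeleteOutcome> {
  const recipe = recipes.find((r) => r.id === id);
  // Ignore requests for recipes that don't exist or that the user doesn't own.
  if (!recipe || recipe.ownerId !== currentUserId) {
    return { status: "ignored", recipes };
  }
  // The user backed out of the confirmation: leave the sidebar list untouched.
  if (!(await deps.confirmDelete(recipe))) {
    return { status: "cancelled", recipes };
  }
  try {
    await deps.sendDelete(id);
  } catch (err) {
    // The backend delete failed, so the recipe stays in the sidebar.
    const message = err instanceof Error ? err.message : String(err);
    return { status: "error", recipes, message };
  }
  // Remove the recipe from the sidebar only after the backend delete succeeds.
  return { status: "deleted", recipes: recipes.filter((r) => r.id !== id) };
}
```

Returning a new array instead of mutating the existing one keeps this easy to feed into React state (for example, `setRecipes(outcome.recipes)`), and each `status` corresponds to one of the four E2E scenarios.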

The results were solid! Of the few distinct features I’ve built with Claude, with varying success, the TDD approach worked best. Claude went back and made some small edits to the tests to match the direction the feature took. Some quick user testing showed that the feature wasn’t outright buggy, and the accompanying E2E tests passed on the first run.

Testing against ephemeral test environments

In our previous Claude Code article, we talked about hosting your Claude app in a Kubernetes preview environment. Here’s why this is helpful during development:

- You can see how your code changes fare when deployed to production-like infrastructure

- Your teammates and stakeholders can access a live preview of your WIP app/feature/PR and provide feedback

- You can run your full test suite against your app, alongside your real services, data, and integrations

To get the most value out of our Claude Code dev/test loop, we can host the app in a remote environment and view every new commit live.

Configuring the app to run on Shipyard

Claude can get our Shipyard configuration ready, which in this case just means adding a few Docker Compose labels to set up routing. I used this prompt to point Claude to the Shipyard docs and give a general idea of the process:

I want to run this app on Shipyard. In order to do so, my services need Shipyard routes configured in the Docker Compose file. We'll want one for the frontend, and one for the API, using the correct filepath. Here's info on adding Compose labels: https://docs.shipyard.build/docker-compose#shipyardroute

We also want to make sure our Cypress tests run against Shipyard instead of localhost. Conveniently, Shipyard injects a SHIPYARD_DOMAIN env var by default, which we can read to get the dynamic environment URL. I prompted Claude with this:

Let's make the Cypress tests use the SHIPYARD_DOMAIN env var as our Cypress base URL. This is injected at build time and at runtime, so it will be available to use when the environment is up.
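The logic behind that prompt is small enough to sketch. The TypeScript below picks a base URL from an environment map, assuming (not confirmed by the Shipyard docs quoted here) that `SHIPYARD_DOMAIN` may be a bare hostname without a scheme, and falling back to a hypothetical local dev URL when the variable is absent so the same suite still runs on localhost. The function name and fallback port are illustrative.

```typescript
// Hypothetical local fallback; use whatever port your dev server listens on.
const LOCAL_FALLBACK = "http://localhost:3000";

// Picks the Cypress base URL from environment variables.
// Assumption: SHIPYARD_DOMAIN may be a bare hostname, so https:// is added if missing.
function resolveBaseUrl(env: Record<string, string | undefined>): string {
  const domain = env["SHIPYARD_DOMAIN"]?.trim();
  if (!domain) {
    // Not running in a Shipyard environment: test the local app instead.
    return LOCAL_FALLBACK;
  }
  // Keep an explicit scheme if one is present; otherwise assume HTTPS.
  const withScheme = /^https?:\/\//i.test(domain) ? domain : `https://${domain}`;
  // Strip trailing slashes for a consistent base URL.
  return withScheme.replace(/\/+$/, "");
}
```

In a `cypress.config.ts`, the result could be passed as `defineConfig({ e2e: { baseUrl: resolveBaseUrl(process.env) } })`. Keeping the lookup in a plain function makes the fallback behavior easy to check without launching Cypress.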

Configuring your CI/CD pipeline

Instead of asking Claude to run my Cypress tests every time I made a new commit, I wanted to automate this simple process via a short GitHub Actions CI/CD pipeline. I gave Claude this prompt:

Let's write a GitHub Actions YAML that has a simple workflow: on every new feature push, it should run the Cypress test suite against the Shipyard URL. Let's do this via the following steps:

- Create the YAML file in .github/workflows/

- Initialize the GitHub Actions file to execute on every push

- Run the Checkout v4 Action

- Run the Cypress command against the Shipyard base url
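The last step, running Cypress against the Shipyard URL, comes down to overriding Cypress’s `baseUrl` for a single run, which the real `cypress run --config baseUrl=...` CLI option supports (`--spec` narrows the run to matching spec files). The TypeScript below is a hypothetical helper that builds that command line. It assumes the environment URL reaches the runner as a plain string; on a GitHub-hosted runner, `SHIPYARD_DOMAIN` is not set automatically, so how the URL gets there depends on your setup.

```typescript
// Hypothetical helper: builds the argv for `npx cypress run` against a deployed environment.
function cypressRunArgs(baseUrl: string, spec?: string): string[] {
  // Fail fast on a missing or malformed URL instead of silently testing the wrong target.
  if (!/^https?:\/\/\S+$/i.test(baseUrl)) {
    throw new Error(`Expected an http(s) URL, got: ${baseUrl}`);
  }
  // --config overrides cypress.config values for this run only.
  const args = ["cypress", "run", "--config", `baseUrl=${baseUrl}`];
  if (spec) {
    // --spec limits the run to the matching spec file(s).
    args.push("--spec", spec);
  }
  return args;
}
```

In a workflow step, the equivalent shell command is `npx cypress run --config baseUrl=<environment URL>`; validating the URL first makes a misconfigured pipeline fail loudly rather than run the suite against nothing.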

While the Cypress tests were still being written or modified, it made sense to have Claude run them directly, so that it could read any failures and error messages and use them to guide its fixes. The pipeline itself is meant for testing new app commits once the Cypress tests are finalized.

Since Claude is committing on our behalf, the pipeline triggers automatically whenever Claude pushes a commit to the repo. This lets us rely on Claude for development work and leave repeatable, pipeline-based tasks to CI.

E2E test early and often with Shipyard

E2E tests automate repetitive actions that would otherwise be done through manual user testing. They help you confirm that real user workflows hold up every time you make a commit. Running them in an agentic development loop feeds the LLM precise context on how and why the app fails, which matters because most agents can’t interact directly with your live app.

When you need production-like infrastructure to test against, you’ll want something that builds/rebuilds fast, and can integrate with your CI/CD pipeline. That’s where Shipyard comes in. Whenever your agent makes a commit, Shipyard rebuilds your production-like test environment in minutes. Your CI/CD or agent then runs your E2E tests against that environment, giving you the output that you need to correct/improve the feature in question.

Using Claude Code? Try Shipyard to complete your agentic development loop.