The Docker ecosystem has grown quite a bit since Docker Engine launched in 2013 — it now includes tooling and frameworks to serve almost every aspect of container-based development and deployment. Here’s a brief glossary of the most important Docker services and how they fit into modern-day development workflows.

Docker Engine

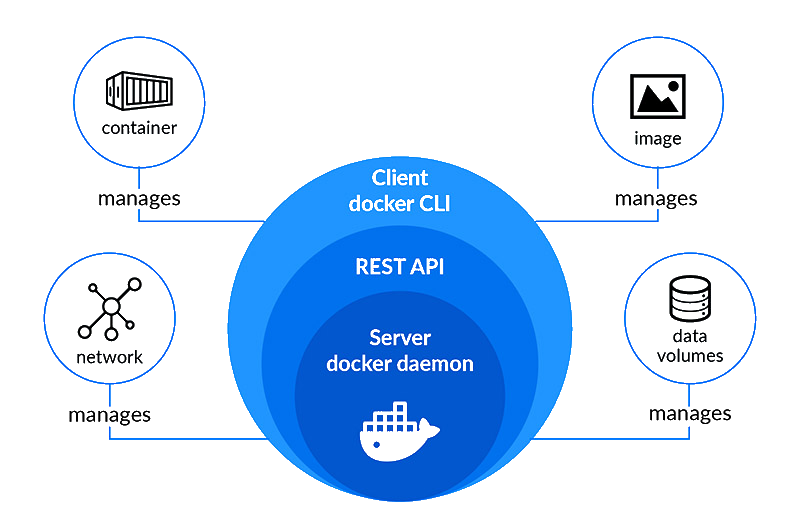

Docker Engine is the open source core of the Docker container platform that allows users to build and containerize their applications via Dockerfile definitions. It’s a client-server application with three main components:

- the server running the dockerd daemon process
- APIs for external applications to communicate with dockerd
- the docker CLI client
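To make the split between those three components concrete, here is a minimal Python sketch that talks to the daemon's API directly, much as the docker CLI does under the hood. It assumes dockerd is listening on the default Unix socket at /var/run/docker.sock (typical for a standard Linux install; Docker Desktop and rootless setups may use a different path) and calls the Engine API's /version endpoint. The class and function names are illustrative and not part of any Docker SDK.

```python
import http.client
import json
import socket


class UnixSocketHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that connects to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5):
        # "localhost" only fills the Host header; routing is done by socket path.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def engine_version(socket_path="/var/run/docker.sock"):
    """Return the daemon's /version payload as a dict, or None if unreachable."""
    conn = UnixSocketHTTPConnection(socket_path)
    try:
        conn.request("GET", "/version")
        response = conn.getresponse()
        if response.status != 200:
            return None
        return json.loads(response.read())
    except (OSError, http.client.HTTPException, ValueError):
        # Socket missing, permission denied, daemon not running, or bad response.
        return None
    finally:
        conn.close()


if __name__ == "__main__":
    info = engine_version()
    if info is None:
        print("Could not reach dockerd on the default socket")
    else:
        print("Engine version:", info.get("Version"))
        print("API version:", info.get("ApiVersion"))
```

If the daemon is reachable, this should report roughly the same server details that docker version prints, which shows that the CLI is just one of many possible API clients.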

Docker Engine development has migrated to the Moby Project, an open source framework maintained by the Docker team. Before the migration in 2020, Docker Engine was a maintained fork of the Moby Project that featured select components from it.

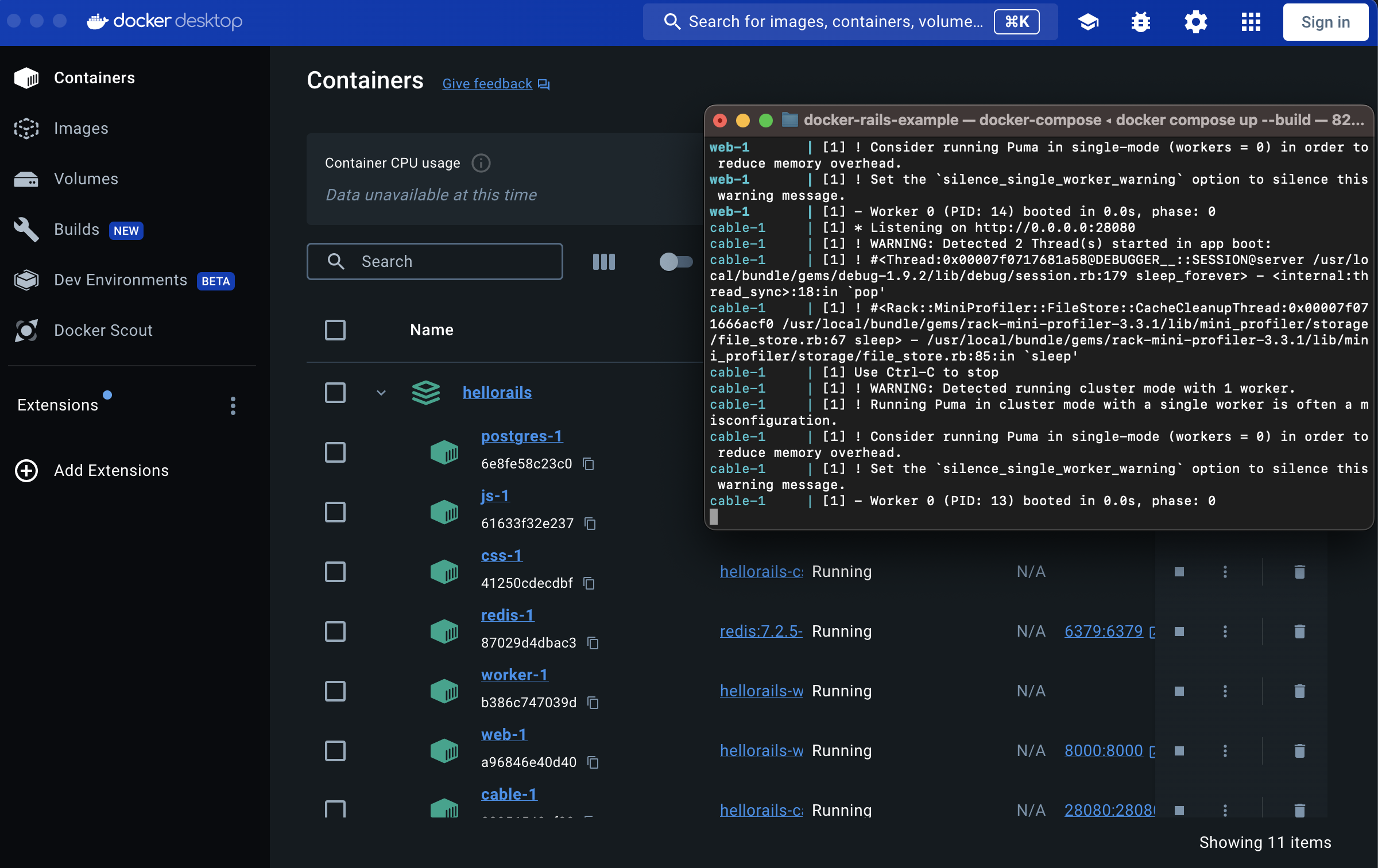

Docker Desktop

Docker Desktop is an all-in-one Docker application for Mac, Linux, and Windows. It bundles a few key Docker functionalities together, and its GUI adds a visual component to Docker development workflows.

One of the major conveniences of Docker Desktop is that it handles installations and upgrades for its bundled Docker tools, including Compose, Docker Engine, and Docker Scout.

Docker Desktop’s tabs help navigate and manage existing containers, builds, volumes, and images. Using Docker Desktop for managing apps really comes down to a matter of personal preference: users can accomplish many of the same things with Docker’s CLI, but some might prefer the visual context that the GUI provides.

Docker Compose

Docker Compose is an orchestration tool for single- and multi-container Dockerized applications. It allows users to define and configure one or more services and their necessary options, volumes, and networks. Running docker compose up then launches and orchestrates the whole application.

Using a compose.yml file is highly preferable to copy/pasting lengthy Docker build and run commands with their accompanying flags and options. Here’s a sample Compose file for an application with five services:

    version: '3'

    services:
      frontend:
        labels:
          shipyard.route: '/'
        build: 'frontend'
        env_file:
          - frontend/frontend.env
        volumes:
          - './frontend/src:/app/src'
          - './frontend/public:/app/public'
        ports:
          - '3000:3000'

      backend:
        labels:
          shipyard.route: '/api'
        build: 'backend'
        environment:
          DATABASE_URL: 'postgres://obscure-user:obscure-password@postgres/app'
          DEV: ${DEV}
          FLASK_DEBUG: '1'
        volumes:
          - './backend/filesystem/entrypoints:/entrypoints:ro'
          - './backend/migrations:/srv/migrations'
          - './backend/src:/srv/src:ro'
        ports:
          - '8080:8080'

      worker:
        labels:
          shipyard.init: 'poetry run flask db upgrade'
        build: 'backend'
        environment:
          DATABASE_URL: 'postgres://obscure-user:obscure-password@postgres/app'
          DEV: ${DEV}
          FLASK_DEBUG: '1'
        command: '/entrypoints/worker.sh'
        volumes:
          - './backend/filesystem/entrypoints:/entrypoints:ro'
          - './backend/migrations:/srv/migrations'
          - './backend/src:/srv/src:ro'

      postgres:
        image: 'postgres:9.6-alpine'
        environment:
          POSTGRES_USER: 'obscure-user'
          POSTGRES_PASSWORD: 'obscure-password'
          POSTGRES_DB: 'app'
          PGDATA: '/var/lib/postgresql/data/pgdata'
        volumes:
          - 'postgres:/var/lib/postgresql/data'
        ports:
          - '5432'

      redis:
        image: 'redis:5.0-alpine'
        ports:
          - '6379'

    volumes:
      postgres:

Compose is a best practice for local development with Docker: it offers easy setup and lightweight orchestration for apps at almost any scale.
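To get a feel for what Compose saves you from typing, here is a rough Python sketch that expands a single service definition into an equivalent docker run invocation. It uses the postgres service from the file above, written out as a plain dict so no YAML library is needed. The docker_run_args helper is hypothetical and covers only a handful of keys; real Compose also handles networks, build contexts, project-scoped naming, and startup order, none of which are modeled here.

```python
import shlex


def docker_run_args(name, service):
    """Translate a small subset of a Compose service into `docker run` arguments."""
    args = ["docker", "run", "--detach", "--name", name]
    for key, value in service.get("labels", {}).items():
        args += ["--label", f"{key}={value}"]
    for path in service.get("env_file", []):
        args += ["--env-file", path]
    for key, value in service.get("environment", {}).items():
        args += ["--env", f"{key}={value}"]
    for volume in service.get("volumes", []):
        args += ["--volume", volume]
    for port in service.get("ports", []):
        args += ["--publish", port]
    # This sketch only supports services that reference a prebuilt image.
    args.append(service["image"])
    if "command" in service:
        args += shlex.split(service["command"])
    return args


# The postgres service from the sample Compose file, as a plain dict.
postgres = {
    "image": "postgres:9.6-alpine",
    "environment": {
        "POSTGRES_USER": "obscure-user",
        "POSTGRES_PASSWORD": "obscure-password",
        "POSTGRES_DB": "app",
        "PGDATA": "/var/lib/postgresql/data/pgdata",
    },
    "volumes": ["postgres:/var/lib/postgresql/data"],
    "ports": ["5432"],
}

command = docker_run_args("postgres", postgres)
print(shlex.join(command))
```

Even for the simplest of the five services, the result is a long one-liner. Multiply that by every service, add the image builds and a shared network, and the case for keeping it all in one declarative file becomes clear.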

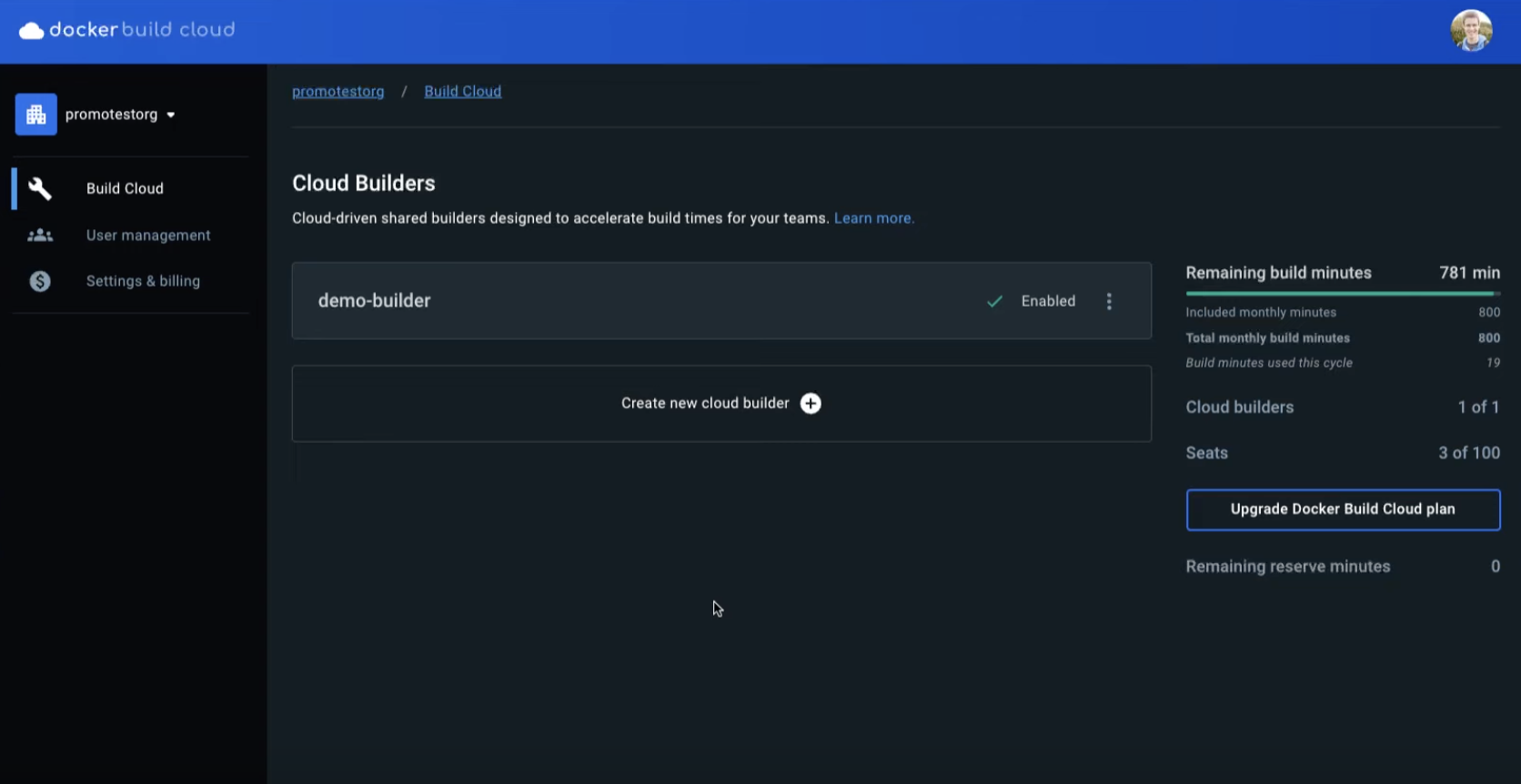

Docker Build Cloud

Docker Build Cloud allows Docker users to outsource their builds to the cloud for faster execution. Locally, builds are highly dependent on your machine’s specs, and consume valuable compute that you might need for other resource-intensive development tasks. Build Cloud offloads image building from your machine, handling builds an order of magnitude faster and in parallel.

Another major technical benefit of Build Cloud is its shared cache — builds across your team execute faster because identical artifacts are reused, going one step further than Docker’s image layer caching.

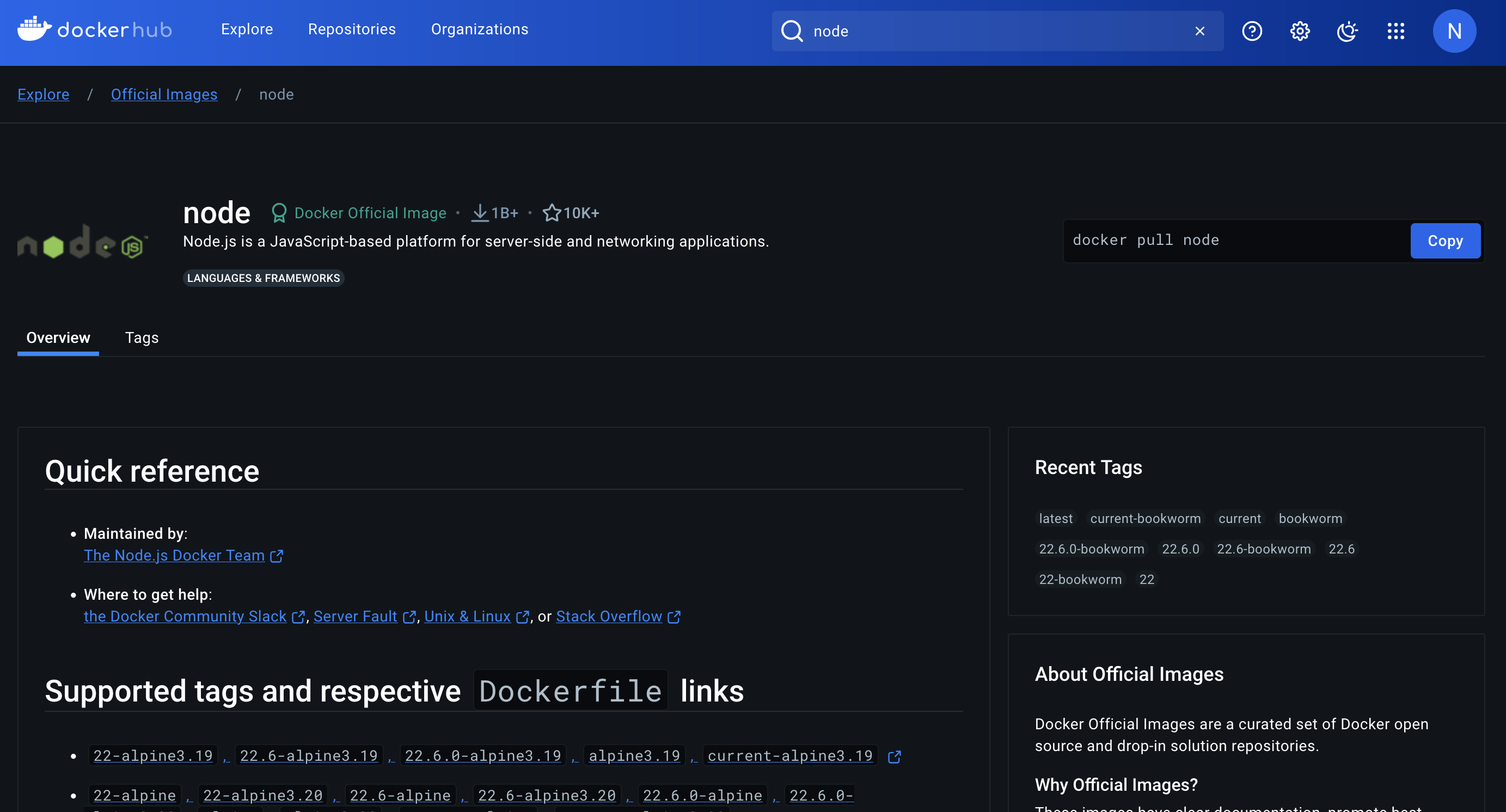

Docker Hub

Docker Hub is Docker’s container registry. It’s a remote, cloud-based service where users can push and pull Docker images for personal or public use.

You can push and pull from Docker Hub using the Docker CLI. For example, you can pull the Node.js image from Docker Hub (pictured above) to your local machine by going to your terminal and running docker pull node.

Teams might use Docker Hub to distribute images for development/testing/reference, or to release the latest version to their user base. Images are versioned with tags, so users can request the exact image version they need, or omit the tag, in which case Docker pulls the image tagged latest.

Docker Hub’s repositories hold a group of related images, distinguished by tag.
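The way a short name like node expands into a full registry, repository, and tag can be sketched in a few lines of Python. This is a simplified approximation of the normalization the Docker CLI applies, not its actual implementation: it assumes Docker Hub (docker.io) as the default registry, the library/ namespace for official images, and latest as the default tag. The ImageRef type and parse_image_ref function are illustrative names, and the acme organization in the usage lines is made up.

```python
from typing import NamedTuple, Optional


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_image_ref(ref: str) -> ImageRef:
    """Split an image reference into registry, repository, tag, and digest."""
    # A digest, if present, follows "@" (for example "@sha256:...").
    name, _, digest = ref.partition("@")

    # A ":" only marks a tag when it appears in the last path component;
    # earlier it would be a registry port such as "localhost:5000".
    tag = None
    last_colon = name.rfind(":")
    if last_colon > name.rfind("/"):
        name, tag = name[:last_colon], name[last_colon + 1:]

    # The first component is a registry host if it looks like one.
    first, sep, rest = name.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = "docker.io", name

    # Official images on Docker Hub live under the "library" namespace.
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository

    # With neither tag nor digest, the tag defaults to "latest".
    if tag is None and not digest:
        tag = "latest"

    return ImageRef(registry, repository, tag, digest or None)


if __name__ == "__main__":
    for example in ("node", "node:20-alpine", "ghcr.io/acme/api:1.2.3"):
        print(example, "->", parse_image_ref(example))
```

Note that latest is just a conventional tag name. It points at whatever image the publisher last pushed under that tag, which is why pinning an explicit version is usually the safer choice for anything reproducible.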

Docker Scout

Docker Scout is a service for Docker image vulnerability scanning. It analyzes an image’s dependencies and provides helpful insights/analytics for software supply chain security. Scout references an attached SBOM (software bill of materials) but also has the capability to generate one from the provided image.

Here’s an example from the Scout docs for scanning an image’s compliance status:

    $ docker scout quickview
    ...
    Policy status FAILED (2/6 policies met, 2 missing data)

      Status │ Policy                                       │ Results
    ─────────┼──────────────────────────────────────────────┼──────────────────────────────
        ✓    │ No copyleft licenses                         │ 0 packages
        !    │ Default non-root user                        │
        !    │ No fixable critical or high vulnerabilities  │ 2C 16H 0M 0L
        ✓    │ No high-profile vulnerabilities              │ 0C 0H 0M 0L
        ?    │ No outdated base images                      │ No data
        ?    │ Supply chain attestations                    │ No data

Scout can be used for free on a limited number of repositories, or purchased through one’s org for use with more repositories.
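The severity shorthand in that output (2C 16H 0M 0L) reads as critical, high, medium, and low counts. As a small illustration of how a team might act on those numbers, here is a hypothetical Python helper that parses the shorthand and decides whether a build should be blocked. It is only a sketch of the idea: the function names are made up, and the human-readable CLI output is not a stable interface, so a real pipeline should rely on whatever machine-readable output or exit-code options the Scout docs describe.

```python
import re

# Letter codes as they appear in the quickview results column.
SEVERITY_NAMES = {"C": "critical", "H": "high", "M": "medium", "L": "low"}


def parse_severity_summary(summary):
    """Turn a string like '2C 16H 0M 0L' into a dict of counts per severity."""
    counts = {name: 0 for name in SEVERITY_NAMES.values()}
    for number, letter in re.findall(r"(\d+)([CHML])\b", summary):
        counts[SEVERITY_NAMES[letter]] += int(number)
    return counts


def should_block(summary, blocking=("critical", "high")):
    """Return True if any blocking severity has at least one finding."""
    counts = parse_severity_summary(summary)
    return any(counts[level] > 0 for level in blocking)


if __name__ == "__main__":
    for row in ("2C 16H 0M 0L", "0C 0H 0M 0L"):
        print(row, "->", parse_severity_summary(row), "block:", should_block(row))
```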

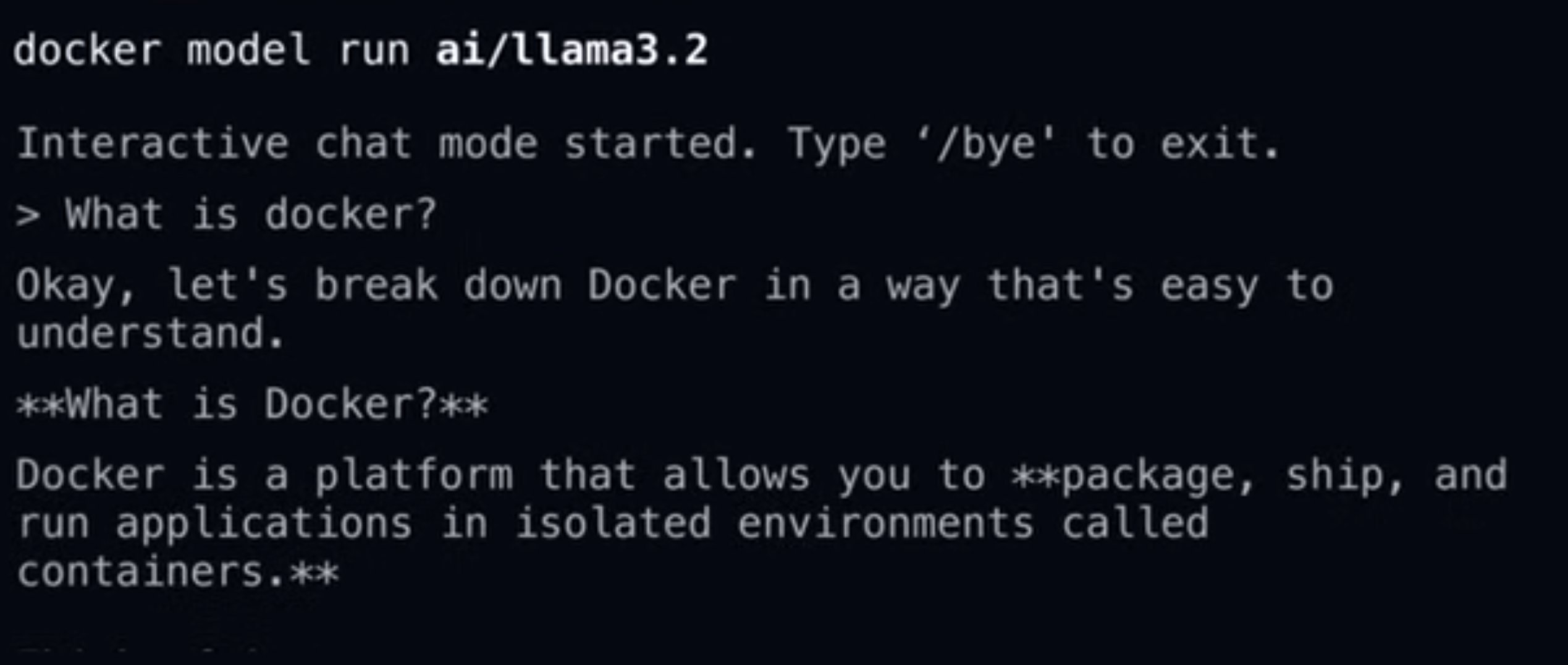

Docker Model Runner

Docker Model Runner is Docker’s new tool for managing and deploying AI models. It allows developers to pull models from Docker Hub, OCI registries, or Hugging Face, then serve them locally. If you’re looking for a way to use local models with Cursor or Continue, Model Runner is an easy and solid option.

Docker Model Runner supports multiple inference engines: llama.cpp, vLLM, and Diffusers. Models load into memory only when needed, and they unload automatically to save resources (important for local development). Users can interact with their models via Docker Desktop or CLI.
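Editors like Cursor and Continue typically talk to local models through an OpenAI-style HTTP API, so a quick way to check that a model is being served is to send it a chat request yourself. The Python sketch below rests on several assumptions that are not stated above and should be checked against your own setup: that Model Runner's host-side TCP access is enabled, that it listens at http://localhost:12434/engines/v1, and that a model named ai/smollm2 has already been pulled. Swap in your own values as needed; the function names are illustrative.

```python
import json
import urllib.error
import urllib.request

# Assumptions: adjust both to match your Model Runner configuration.
BASE_URL = "http://localhost:12434/engines/v1"
MODEL = "ai/smollm2"


def build_chat_request(prompt, model=MODEL, base_url=BASE_URL):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, timeout=60):
    """Send the prompt and return the reply text, or None on any failure."""
    try:
        with urllib.request.urlopen(build_chat_request(prompt), timeout=timeout) as response:
            body = json.load(response)
        return body["choices"][0]["message"]["content"]
    except (OSError, ValueError, KeyError, IndexError, TypeError):
        # Server not running, unexpected status, or unexpected response shape.
        return None


if __name__ == "__main__":
    reply = ask("Summarize what a container image is in one sentence.")
    print(reply if reply is not None else "No response from the local model endpoint")
```

Because the model loads on demand, the first request after a period of inactivity may take noticeably longer than later ones.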

Docker Sandboxes

Docker Sandboxes let you run AI coding agents on your local machine within isolated microVMs. Each sandbox has its own Docker daemon. This is an important security measure to take when using coding agents, as they can execute risky commands or install insecure packages on your system.

You can create a sandbox for Claude Code by running:

docker sandbox run claude ~/my-project

Then exec into it:

docker sandbox exec -it <sandbox-name> bash

Sandboxes support file sharing between the host and sandbox directories, as well as network access controls. You can run multiple concurrent sandboxes for multi-agent setups.

Beyond the Dockerverse

Docker has spent the last decade giving developers the tooling they need for nearly every aspect of containerized development. But what happens after your features leave your local development environment? Shipyard takes your Dockerized app and transpiles its Docker Compose file to Kubernetes manifests, giving you full-stack ephemeral environments on every PR. This way, you’ll get the production-like performance you need without focusing on the underlying infrastructure.